Big data or big hype: a MERL Tech debate

by Shawna Hoffman, Specialist, Measurement, Evaluation and Organizational Performance at the Rockefeller Foundation.

Both the volume of data available at our fingertips and the speed with which it can be accessed and processed have increased exponentially over the past decade. The potential applications of this to support monitoring and evaluation (M&E) of complex development programs have generated great excitement. But is all the enthusiasm warranted? Will big data integrate with evaluation, or is this all just hype?

A recent debate that I chaired at MERL Tech London explored these very questions. Alongside two skilled debaters (who also happen to be seasoned evaluators!) – Michael Bamberger and Rick Davies – we sought to unpack whether integration of big data and evaluation is beneficial – or even possible.

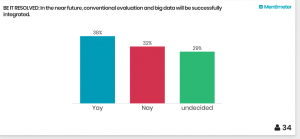

Before we began, we used Mentimeter to see where the audience stood on the topic.

Once the votes were in, we started.

Both Michael and Rick have fairly balanced and pragmatic viewpoints; however, for the sake of a good debate, and to help unearth the nuances and complexity surrounding the topic, they embraced the challenge of representing divergent and polarized perspectives – with Michael arguing in favor of integration, and Rick arguing against.

“Evaluation is in a state of crisis,” Michael argued, “but help is on the way.” Arguments in favor of the integration of big data and evaluation centered on a few key ideas:

- There are strong use cases for integration. Data science tools and techniques can complement conventional evaluation methodology, providing cheap, quick, complexity-sensitive, longitudinal, and easily analyzable data.

- Integration is possible. Incentives for cross-collaboration are strong, and barriers to working together are falling. Traditionally these fields have been siloed, and their relationship has been characterized by a mutual lack of understanding (or even by each questioning the other’s motivations or professional rigor). However, data scientists are increasingly recognizing the benefits of mixed methods, and evaluators are seeing the potential to use big data to increase the number of types of evaluation that can be conducted within real-world budget, time and data constraints. There are some compelling examples (explored in this UN Global Pulse Report) of where integration has been successful.

- Integration is the right thing to do. New approaches that leverage the strengths of data science and evaluation are potentially powerful instruments for giving voice to vulnerable groups and promoting participatory development and social justice. Without big data, evaluation could miss opportunities to reach the most rural and remote people. Without evaluation (which emphasizes transparency of arguments and evidence), big data algorithms can be opaque “black boxes.”

While this may paint a hopeful picture, Rick cautioned the audience to temper its enthusiasm. He warned of the risk of domination of evaluation by data science discourse, and surfaced some significant practical, technical, and ethical considerations that would make integration challenging.

First, big data are often non-representative, and the algorithms underpinning them are non-transparent. Second, “the mechanistic approaches offered by data science, are antithetical to the very notion of evaluation being about people’s values and necessarily involving their participation and consent,” he argued. It is – and will always be – critical to pay attention to the human element that evaluation brings to bear. Finally, big data are helpful for pattern recognition, but the ability to identify a pattern should not be confused with true explanation or understanding (correlation ≠ causation). Overall, there are many problems that integration would not solve, and some that it could create or exacerbate.

The debate confirmed that this question is complex, nuanced, and multi-faceted. It was a reminder that there is cause for enthusiasm and optimism, alongside a healthy dose of skepticism. What became very clear is that the future should leverage the respective strengths of these two fields in order to maximize good and minimize potential risks.

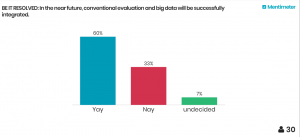

In the end, the side in favor of integration of big data and evaluation won the debate by a considerable margin.

The future of integration looks promising, but it’ll be interesting to see how this conversation unfolds as the number of examples of integration continues to grow.

Interested in learning more and exploring this further? Stay tuned for a follow-up post from Michael and Rick. You can also attend MERL Tech DC in September 2018 if you’d like to join in the discussions in person!
