No Data, No Problem: Extracting Insights from Data-Poor Environments

by Jonathan Tan

In data-poor environments, what can you do to get what you need? For Arpitha Peteru and Bob Lamb of the Foundation for Inclusion, the answer lies at the intersection of science, story, and simulation.

The session, “No Data, No Problem: Extracting Insights from Data-Poor Environments,” began with a philosophical assertion: all data is qualitative, but some of it can be quantified. The speakers argued that the processes we use to extract insights from data are fundamentally shaped by our personal assumptions, interpretations, and biases, and that using data without considering those fundamentals can produce unhelpful insights. As an example, they cited an unnamed cross-national study of fragile states that committed several egregious data sins:

- It assumed that household data aggregated at the national level was reliable.

- It used an incoherent unit of analysis. A country-level metric for Somalia, for example, makes no sense because it ignores the qualitative differences between Somaliland and the rest of the country.

- It ignored the complex web of interactions among several independent variables, producing pairwise correlation metrics that were themselves meaningless.

For Peteru and Lamb, the indiscriminate application of data analysis methods without understanding the forces behind the data is a failure of imagination. They described how the Foundation for Inclusion’s approach to social issues is shaped by an appreciation for complex systems, and illustrated the point with a demonstration: when you pour water from a pitcher onto a table, the rate at which water leaves the pitcher exactly matches the rate at which it hits the table. If you measured both and looked only at the data, you would find a correlation of 1 and could conclude that the mechanism at work was simply that the table got wet because water was leaving the pitcher. But what happens when there are unobserved intermediate steps? What if, for instance, the water flowed into a cup on the table, which had to overflow before the table got wet? Or what if the water was poured into a balloon, which had to fill past a certain threshold before bursting and wetting the table? The data in isolation would tell you very little about how the system actually worked.

What can you do in the absence of good data? Here, the Foundation for Inclusion turns to stories as a source of information. They argue that talking to domain experts, reviewing local media, and gathering individual viewpoints can reveal patterns and allow researchers to formulate potential causal structures. Of course, the further one gets from the empirics, the greater the uncertainty, but that uncertainty can be quantified and mitigated with sensitivity tests and similar techniques. Peteru and Lamb’s point was that even anecdotal information can be enough to assemble a hypothesized system (or set of systems) that can then be explored and validated through simulation.
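
One simple form of sensitivity test is a one-at-a-time (OAT) analysis: hold every parameter at a baseline value, swing one parameter across its plausible range, and record how much the output moves. The Python sketch below assumes a hypothetical toy model, `water_on_table`, whose `(low, baseline, high)` ranges stand in for values elicited from interviews or local reporting; neither the model nor the ranges come from the speakers.

```python
# Hypothetical (low, baseline, high) ranges, e.g. elicited from domain experts.
PARAMS = {
    "pour_rate": (1.0, 2.0, 3.0),
    "cup_capacity": (3.0, 5.0, 10.0),
    "steps": (8, 10, 12),
}


def water_on_table(pour_rate, cup_capacity, steps):
    """Toy model: a constant pour into a cup; return total overflow onto the table."""
    return max(0.0, pour_rate * steps - cup_capacity)


def one_at_a_time(model, params):
    """Swing each parameter between its low and high value, others at baseline.

    Returns {parameter: output swing}, largest swing first.
    """
    baseline = {name: base for name, (_, base, _) in params.items()}
    swings = {}
    for name, (low, _, high) in params.items():
        outputs = [model(**{**baseline, name: value}) for value in (low, high)]
        swings[name] = max(outputs) - min(outputs)
    return dict(sorted(swings.items(), key=lambda item: item[1], reverse=True))


for name, swing in one_at_a_time(water_on_table, PARAMS).items():
    print(f"{name:>12}: output swing {swing:.1f}")
```

Parameters with the largest swings are natural candidates for more fieldwork or expert review. Because OAT moves one parameter at a time, it misses interactions between parameters; variance-based methods such as Sobol indices are a common alternative when interactions matter.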

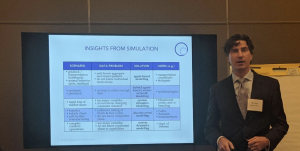

Simulations were the final piece of the puzzle. As researchers gain increasing access to the hardware and computing expertise needed to simulate complex systems, including systems built from the stories described above, the speakers argued that simulation is an increasingly viable way to explore those stories and validate hypothesized causal systems. There is no one-size-fits-all approach: they discussed several types of simulation, from agent-based models to Monte Carlo models, and when each might be appropriate. Health agencies, for instance, already use sophisticated simulations to forecast the spread of epidemics, a setting in which waiting to collect sufficient data would be too slow to act on. By simulating thousands of potential outcomes while varying key parameters, and systematically eliminating models that produced undesirable outcomes or relied on highly uncertain data, one could, in theory, be left with a handful of simulations whose parameters would be instructive.
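
The winnowing process described above can be sketched as a Monte Carlo sweep. The Python below is illustrative only: it assumes a simple discrete-time SIR epidemic model (a stand-in, not a model the speakers presented), samples the transmission rate `beta` and recovery rate `gamma` from made-up ranges, treats a peak above a hypothetical `capacity` as an "undesirable outcome," and summarizes the parameters of the runs that survive. It implements only the first elimination criterion; screening out runs that rest on highly uncertain inputs would also require recording where each parameter value came from.

```python
import random
import statistics


def sir_peak(beta, gamma, population=10_000, initial_infected=10, days=200):
    """Run a discrete-time SIR model and return the peak number infected."""
    s, i = population - initial_infected, float(initial_infected)
    peak = i
    for _ in range(days):
        new_infections = beta * s * i / population
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        peak = max(peak, i)
    return peak


def monte_carlo(runs, capacity=1_500, seed=42):
    """Sample parameters, simulate, and keep only runs whose peak stays within capacity."""
    rng = random.Random(seed)  # fixed seed so the sweep is reproducible
    kept = []
    for _ in range(runs):
        beta = rng.uniform(0.1, 0.5)    # hypothetical range for the transmission rate
        gamma = rng.uniform(0.05, 0.2)  # hypothetical range for the recovery rate
        if sir_peak(beta, gamma) <= capacity:
            kept.append((beta, gamma))
    return kept


runs = 2_000
kept = monte_carlo(runs)
ratios = [beta / gamma for beta, gamma in kept]
print(f"kept {len(kept)} of {runs} runs")
print(f"beta/gamma among kept runs: median {statistics.median(ratios):.2f}, max {max(ratios):.2f}")
```

In this model `beta / gamma` is the basic reproduction number, so examining its values among the surviving runs is one way to read the speakers' point that the remaining parameters can be instructive.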

The purpose of data collection is to produce useful, actionable insights. Thus, in its absence, the Foundation for Inclusion argues that the careful application of science, story, and simulation can pave the way forward.
