We’ve (mostly) banned AI assistants from NLP Community of Practice events. Here’s why.

This post was co-authored by Isabelle Amazon-Brown and Linda Raftree, who both work with the MERL Tech Initiative and the Natural Language Processing Community of Practice (NLP-CoP).

In the past couple of years, almost every virtual meeting we’ve attended has included invisible, unobtrusive guests, sometimes even with cute names, and with a theoretically useful role: to take meeting notes. A lot of the time, they are taking notes on behalf of those who can’t attend, but they might also accompany attendees.

These guests are AI note-taking assistants, and the NLP Community of Practice recently decided to ban them from our virtual events, with some important exceptions. Here's why we've done so, along with a few tips on how to navigate accepting, declining or requesting note-taking assistants in meetings.

Your personal, pre-meeting conversations might be shared with all the meeting registrants

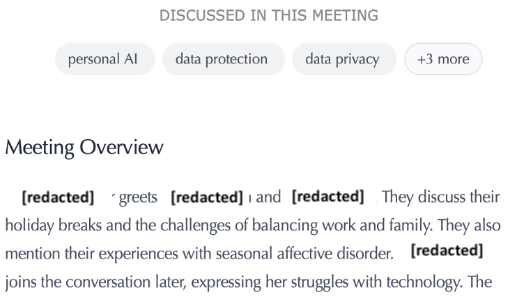

Earlier this year, someone tried out an AI note taker at a casual work meeting, because some of us were not able to be at the meeting. About an hour after the meeting, everyone who had registered for the meeting (even if they didn’t attend) got this meeting summary:

The meeting was among friendly professional partners, so it wasn't a huge deal to have personal conversations like this shared without express permission. But what if it had been a bigger meeting, with people the attendees didn't know well? It can be surprising and uncomfortable for everyone when this kind of thing happens, and it's easy to forget that we're being surveilled by these little fellows.

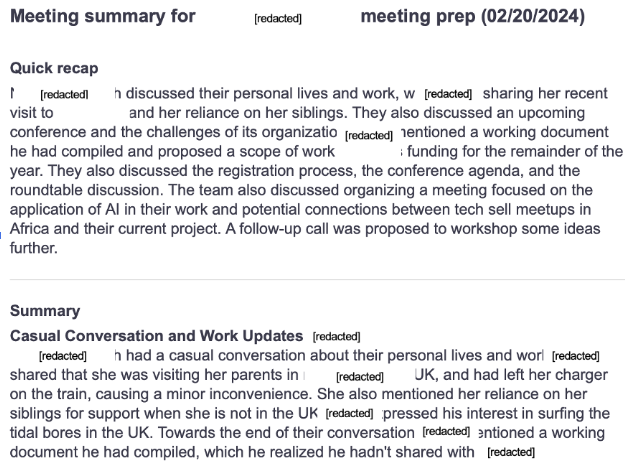

Here’s another example:

It made us wonder what the longer-term consequences of AI note takers might be. Will people move to unofficial channels for certain work conversations because all their Teams and Zoom meetings are being recorded and transcribed by AI note takers, with little consent or control over what is captured and shared? Will we start to avoid having important interpersonal conversations at our meetings? What does that mean for bonding among team members and partner organizations? Once people realize that their chit chat is being transcribed and shared around, will they stop chit chatting?

Conversations become dull and extractive

Another challenge with AI note takers increasingly attending meetings and online events in place of humans is that conversations tend to become a bit dull. As an organization that does a lot of convening, the NLP Community of Practice relies on lively, interesting conversations to make meetings worth attending. We understand that people may have to miss a meeting now and then for a variety of reasons, but sending an AI note taker can feel extractive for those who are attending: their ideas are lifted from the meeting with no reciprocal conversation or knowledge sharing, no consent, and no real idea of who is doing this or for what purpose. While AI note takers are useful for some folks (as we note below), if everyone starts using them, we're certain that the quality of our discussions and learning will diminish.

We've seen similar pushback from people on listservs. Recently, a person was suspected of posting an AI-generated response to a discussion on a listserv that we subscribe to. Others quickly voiced their discontent, saying that they had joined the list for the quality of the discourse and that if they saw posts suspected of being AI-generated, they would unsubscribe.

The UX of note-taking software assumes consent

When note-taking assistants ‘join’ a meeting, consent to join, and thus to start processing and storing data, is often granted by whoever happens to be in the room first – and all it takes is the click of a button. People who arrive at the meeting later have had their ability to consent taken away from them. Especially with freemium services, meeting audio is processed and stored in the cloud, making it vulnerable to unauthorized access, misuse, or hacking – and someone is probably making money from your data (again).

In an ideal world, this is what would happen instead:

- What happens to the data recorded would be clearly and succinctly explained as part of this process (not hidden behind an easy-to-miss link)

- Every single participant would be able to grant or deny their consent – and the assistant would be allowed to proceed only with unanimous consent.

- Every single participant would be able to request access, and request deletion, of the data recorded.

Given that we run events with sometimes hundreds of participants, this is clearly not practical, even if the software enabled this process. Protecting our members' privacy and minimizing the collection of data is a key principle of the NLP Community of Practice's Charter. As such, the leadership team decided to take the opposite approach to that of note-taking assistants and assume no consent on behalf of our meeting participants.

Important accessibility exceptions

Of course, in the process of making this decision, we considered the accessibility needs of our members too. AI note takers are useful for those with hearing impairments, but also for those whose native tongue is not English (our meetings are held in English), or whose English is not attuned to certain regional accents, or those with carpal tunnel syndrome or other types of injury. Similarly, neurodiverse individuals may benefit from using note-taking tools to help them process information in a way that suits their needs, both during and after the meeting.

We decided to handle this eventuality by including a statement in our Zoom meeting registration process, alerting attendees that note-taking software is discouraged, and asking them to request in advance if they would like to use one. If we see AI note takers that we haven’t been notified about in advance, we boot them out of the meeting.

As the comments on this great post testify, this is definitely not a perfect solution: by asking attendees with specific needs to identify themselves, we may also be denying them their own right to privacy and/or making them feel othered and upset. As one commenter shared (on a public channel): "I have ADHD and having a transcript and audio to refer to helps me with recall. I worry that if the answer is 'no', I'll have to explain that it's an ADHD tool, or if I accept the 'no' I'll be anxious throughout the entire call."

Suffice it to say, we don't ask many questions if someone tells us they will bring an AI note taker, but we felt that adding a bit of friction to the decision to use one would be OK. In taking a 'no consent assumed' approach, while including a discreet option to request use of note-taking software, we've at best achieved a wobbly middle ground in an attempt to balance the rights and needs of various groups – we welcome input from our members and others to help us refine this approach.

How we can do our bit as individuals

There are a few things we can all do on an individual level to stem the tidal wave of AI-related intrusions we're experiencing daily, while treating others' desire or need to use note takers with respect.

- Don’t use AI note takers unthinkingly. Many people are allowing their chosen software to auto-invite itself to any meeting they’re attending, whether or not they’re there to check in with other participants on its use. If you really need to use one, send a line before the meeting to specifically request it.

- If you need to use an AI note taker, opt for versions where recordings/transcripts are locally hosted.

- Do include a line on the use of AI note takers in meeting invites, if it feels relevant, and invite discussion.

- Learn how to say no. It can be awkward being that one person saying no when everyone else either said yes, or doesn’t care. Choose a simple statement like “Can we switch off the note-taking software?”. There is no need to apologize, or explain why. The more we hear ourselves and others speaking up, the more we will normalize the process.

- Don't question it. If someone declines note-taking software in a meeting you're running, don't ask why. Ask if anyone else objects, without asking for a reason, and then act accordingly.

- Advocate for consent culture. Commentators on this issue have rightly pointed out that saying no is sometimes not an option in situations where more significant power dynamics are at play (like within larger organizations, or in meetings with clients or donors). Even if you don't feel like you have a choice in the moment, you do have the choice to give feedback to your organization that its consent culture needs improving.

- Don’t assume the worst. When we’re hounded by data and privacy anxieties, including those prompted by legitimately unthinking or malicious behaviors, we can sometimes take a polarized position. Try to assume the best, not the worst, in fellow meeting guests who may have their own legitimate reasons for use.

- Share meeting recordings and transcriptions if needed. It's within our control to start and stop recordings at meetings, allowing for chit chat and personal bonding at the start. Recordings can be edited later by meeting hosts, and they can be paused if someone asks to be off the record. After a meeting, transcripts can be shared on request, making AI note takers somewhat redundant if the purpose is simple note taking. For our meetings, we also ask for consent to record during the sign-up process, and we keep a record of that consent as part of our consent tracing.

Will our position on AI note takers evolve?

None of these solutions to the dilemma is perfect, and we imagine our stance on AI note takers will evolve with time – and hopefully so too will the consent processes of the software. We’d love to know – how do you handle this phenomenon? Do you have any go-to phrases you use to easily deny consent or request use?


I appreciate this post so much. I had just put out a post addressing bot-bans as I’ve been in so many discussions about bots lately. I landed in a similar place as you for a community of practice I am facilitating in terms of not allowing outside bots. But I’m trying to lean into my responsibility as meeting host to meet the accessibility needs by allowing my own bot for transcript generation with transparency about when it will and won’t be used. I also really wish there was a better way of gaining consent and having it be modifiable in real time. Hopefully this will be a future development. Here’s my post if you’re interested: https://missionbloom.substack.com/p/to-bot-or-not-why-blocking-ai-meeting

Thanks so much for the feedback Valerie, and for the link to your brilliant post – all great tips which I’ll be sharing with the NLP CoP / MTI team!

Absolutely great post. I think we’re having similar discussions at Caribou. We’re not fans of notetakers at external meetings, especially when they join before a human does! But they may be useful as notetakers for internal meetings? And as you say, a recording and transcript allows for agency over interpretations.

Agreed, we did feel the need to take a more intransigent stance to begin with, but it will definitely evolve over time!

This is a brilliant article; it's a new experience for me. However, it's worth disseminating to different platforms for adaptation. Big up Isabelle!

Thank you for the kind feedback Lawrence, I’m glad you found it interesting!