New resource: Tool for Assessing AI Vendors

Authors: Grace Higdon and Linda Raftree

Revolution Impact and The MERL Tech Initiative have teamed up to create an AI Vendor Assessment Tool designed for decision-makers who work in the international development, humanitarian, and social impact sectors and who need to assess AI vendors but may not have specialized knowledge in AI systems. These could be program managers, MERL professionals, and/or technical staff who are considering AI tool procurement.

Who and what is the assessment tool for?

The tool aims to provide a straightforward, criteria-based analysis of vendor credibility and implementation track record and to support conversations with downstream developers and deployers.

It focuses on requirements to explore when selecting an AI vendor or partner. These vendors or partners may have a specific tool they are marketing, or they might offer bespoke AI-enabled services. The assessment tool aims to partially address both scenarios, with a particular focus on ‘explainable’ AI, error detection and validation processes, and mechanisms for human review and override.

The assessment tool can help guide conversations with AI vendors about what exactly a tool or product can and cannot do. It assumes either some in-house IT expertise or small teams willing to engage with technical aspects around security. It also tries to dig into some of the core practical and ethical issues that are within the control of an AI vendor to alter. The assessment tool may also be a useful document for a potential vendor to understand an organization’s needs and ethical requirements for using AI.

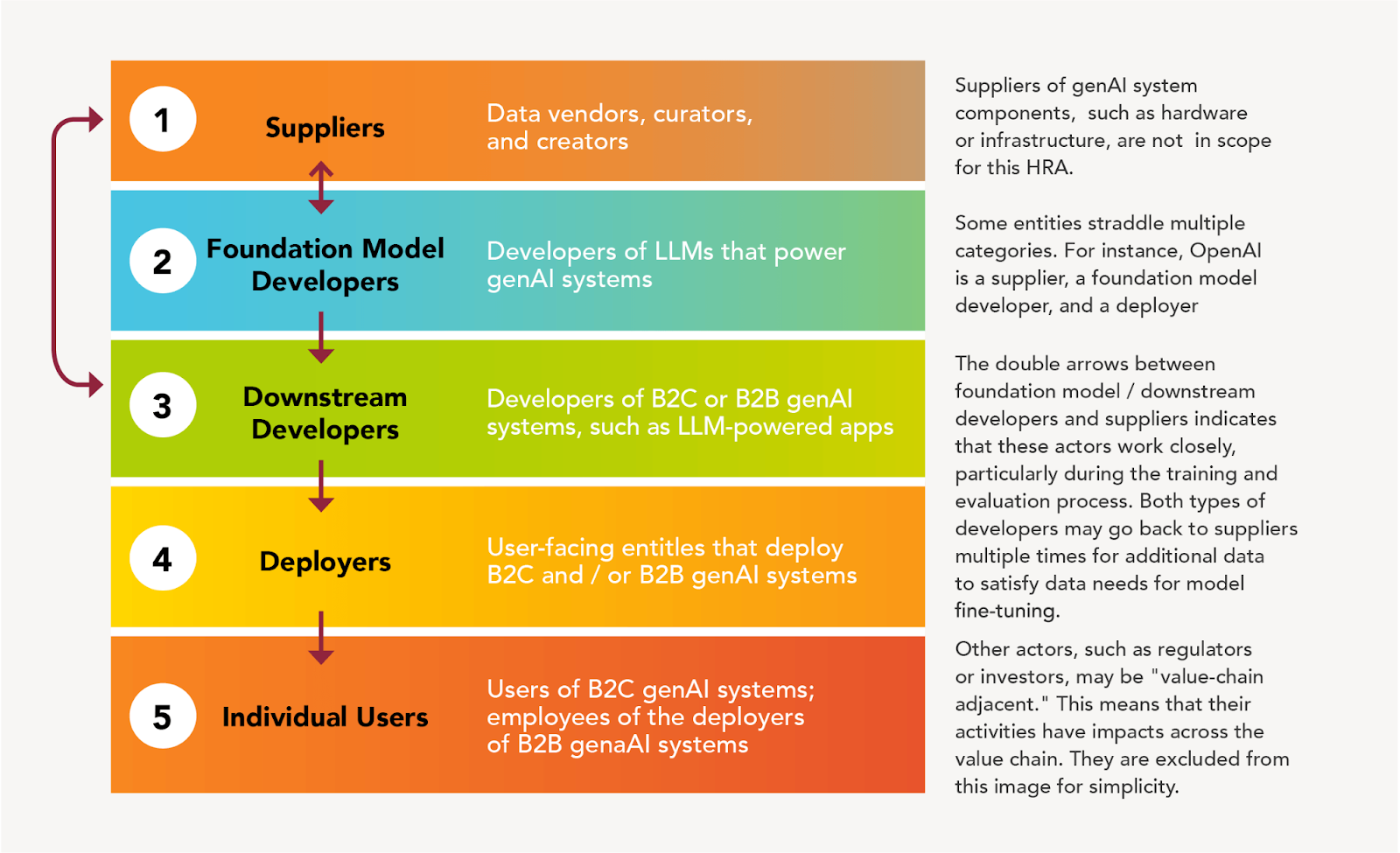

(Note: the assessment tool is not intended to provide orientation on how to assess underlying AI models (‘foundation models’), as these processes are largely inaccessible to the international development and social impact sectors. See below for a nice graphic of the various levels of AI players.)

Why did we create this assessment tool?

As we’ve engaged with the Natural Language Processing Community of Practice (NLP-CoP), members have lamented that capacity to assess and vet AI vendors and tools is low in the social sector and among the evaluation community. There is express interest in sector-focused frameworks and assessment tools that help MERL professionals and those working in civil society organizations to assess AI tools and services, as well as in guidance on how to engage in conversations with AI vendors.

While we have experienced the benefits of AI use in specific, bounded use cases, we have also witnessed concerning patterns of inflated claims about AI capabilities coming from the wider tech industry, large commercial companies, and some AI vendors who are approaching non-profit organizations to sell their AI tools. Our community members are struggling to identify ethical AI tools and vendors and to differentiate hype from reality when choosing tools at a practical level. This can lead to wasted resources due to unreliable or irrelevant outputs that contain hallucinations, fabrications, and at times harmful biases. At a time when funding is shrinking and automation is being celebrated by many, it’s essential that social sector decision-makers enhance their critical AI literacy so that they can make smart and informed choices about AI adoption.

A host of ethical challenges with AI has been documented (intellectual property theft, exploitation of data labelers, eroding democracy, algorithmic bias, climate impacts, the global shift away from AI safety and ethics, and more). At the same time, AI is becoming ubiquitous, and examples of where it can offer value are emerging as the sector experiments with and adopts AI for different purposes. In this context, we have adopted a “least worst” mindset and remain committed to surfacing and sharing the complexities regarding AI development and use.

The assessment tool is an effort to help community members navigate some of these challenges and to provide a straightforward, criteria-based analysis of vendor credibility and implementation track record.

This is the first version of this assessment tool, and we appreciate those who supported us with peer review. We plan to update it in the future as the AI space is one of rapid change! If you have suggestions or ideas on how this resource could be improved, please reach out to us at hello@merltech.org.
