It’s the end of 2024: Where are we with GenAI for MERL?

Some ten years after the emergence of MERL Tech, I thought it might be fun to share some observations of the evolution of the space, from the early days when people were first thinking about digital data collection to today when we’re seeing generative AI everywhere we look.

The first five years of MERL Tech

The MERL Tech sector has been around since at least 2014, when we first started using the term ‘MERL Tech’ to describe what was happening at the intersection of monitoring, evaluation, research and learning (MERL) and technology. Back when we started noticing that something was happening with tech and MERL, things like crowdsourcing, data collection on mobile devices, and open data for better accountability were in vogue. Michael Bamberger and I wrote about this in an early landscaping paper called Emerging opportunities: Monitoring and evaluation in a Tech Enabled World.

The landscape report spurred the first MERL Tech Conference in 2014 and a series of follow-on conferences between 2014 and 2019. Out of the conferences, a MERL Tech Community emerged — a confluence of people and discussions at the intersection of technology and MERL in international development, social impact, and humanitarian initiatives.

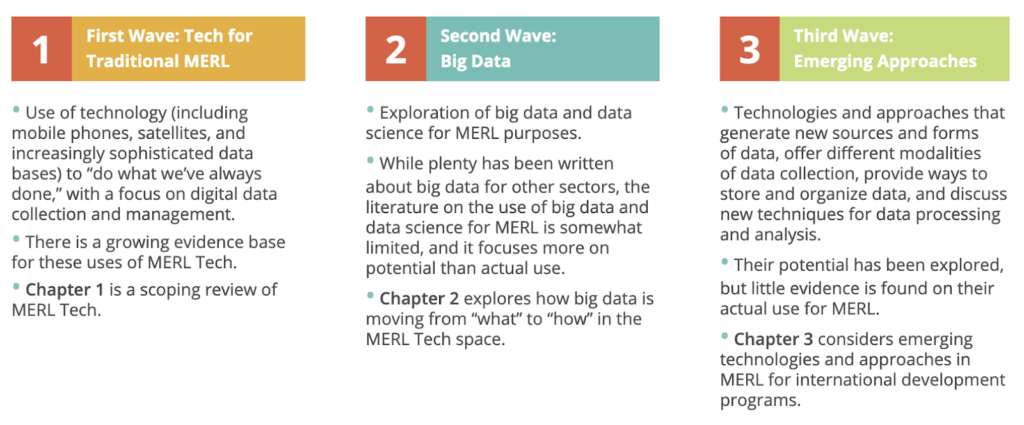

In 2019, a group of us penned a set of papers to document the State of the Field of MERL Tech where we gave an overview of the evolution of MERL Tech. Our analysis explored three waves of MERL Tech and considered how technological innovations had diffused throughout the sector over the past 5 years: Wave 1: Tech for Traditional MERL, Wave 2: Big Data and Data Science and Wave 3: Emerging approaches.

2020-2021 brought greater digitalization and a move to remote M&E

We published these papers in early 2020, right after the COVID Pandemic hit. The realities of the pandemic led to a big increase in access to and use of mobile phones and mobile internet globally, and they also meant that organizations were forced to digitalize their programs and data collection. I wrote about the opportunities and challenges of remote M&E for ALNAP in 2021, including aspects of inclusion, safety and well-being, expectations and trust, data quality, and data protection.

While there’s been a return to in-person MERL, another big shift has been in the location of MERL functions. The pandemic, together with decolonization efforts and the move towards localization, pushed the sector to realize that we didn’t actually need a lot of people flying in from the US and Europe to do MERL. These trends are continuing.

2022: ChatGPT drops

Soon after, in 2022, the latest disruptive trends – Natural Language Processing and Generative AI – arrived. The MERL Tech Initiative launched our Natural Language Processing Community of Practice (NLP-CoP) in January 2023 in an effort to help democratize learning about and access to this complex technology; support peer learning for the wider MERL Tech community; offer space for playing around and testing these tools and approaches; and develop public goods to help guide responsible use of these new approaches.

2023: one year into GenAI hype

At the end of 2023, with a year of learning from running the NLP-CoP and immediately after attending the American Evaluation Association (AEA) conference, Zach Tilton and I made some observations and predictions about AI in Evaluation.

We wrote that:

- Demand for AI-enabled evaluation learning was extremely high

- Concerns about using AI for evaluation were also high

- We didn’t really know yet what emerging AI could and couldn’t (or shouldn’t!) do for evaluation

- GenAI was not vaporware, and evaluators needed to pay attention to it

- Organizations would rush to build AI-enabled evaluation machines

- We needed to refine research and upskilling agendas for AI in the sector

We also defined a set of questions for the MERL Tech sector:

- What does the ‘jagged frontier’ look like for emerging AI in evaluation? Can we achieve the same or better levels of efficiency or quality for certain tasks or processes when we use AI? Which ones? How could we measure, document, and share this information with the wider evaluation community?

- Where is automation possible and desired? Can emerging AI support high-level analysis tasks? How far can AI models go to create evaluative judgments? How far do we want AI to go? Where and when is automation a bad idea? Where and how do humans remain in the loop? How can humans and AI work together in ways that align with institutional or sector-level values?

- Where do current AI tools and models offer reliable results? How could large language models be improved so that they are more consistently accurate and useful for MERL-related tasks? What level of error are we comfortable with for which kinds of processes, and who decides? What about accuracy and utility for non-English languages and cultures? How should various aspects of inclusion be addressed? Where does this overlap with data privacy aspects?

- What does meaningful participation and accountability look like when using NLP for MERL? What meaningful ways can communities and evaluands be involved when AI is being used? How can we do this practically? Is it possible to get meaningful, informed and active consent when AI is involved? How?

- Can emerging AI fill a skills or experience gap? Could AI tools enable young evaluators to more equitably access opportunities in the professional evaluation space? What AI-related skills do experienced professionals, institutional decision-makers, and evaluation commissioners need to have?

While we’ve advanced quite a bit, most of these questions still need answering. We need more documentation of how AI / GenAI and NLP are actually being used in the sector: how people are using them for MERL, how well they are working, and for whom. We still need funding to do this documentation work and support the learning agenda.

GenAI adoption in 2024

Now, here we are in late 2024, after another year of learning with the NLP-CoP (read more on the MERL Tech news page) and a few more conferences under our belts. I’ve been thinking a lot about where the MERL Sector (and the wider social sector overall) is now with Generative AI, based on what we’ve learned by talking and working with quite a lot of people this past year. That includes folks working on Evaluation specifically, those working more broadly on MERL, the digital development sector, humanitarians, data-focused folks, and those working on digital social and behavior change.

Buckets of GenAI

I find it useful to think about the audiences for/users of GenAI applications in the social sector in three large buckets:

- Back-office focused GenAI that aims to improve internal efficiencies

- Front line staff-focused GenAI that aims to support staff (such as community health workers or enumerators) who engage with program participants and evaluands by offering better tools or improved knowledge and processes

- Community-facing GenAI that aims to directly engage or serve community members, program participants, clients, etc.

The effectiveness, utility, risks, costs, quality, etc., for the above types of GenAI applications vary immensely depending on a huge range of factors including purpose, context, language, existing data quality, data sensitivity, population involved, partners and vendors, and more. We need to get very specific when we talk about AI because there is a LOT of variety in how it can be used and how well it might work based on these and other factors.

GenAI on the back-end

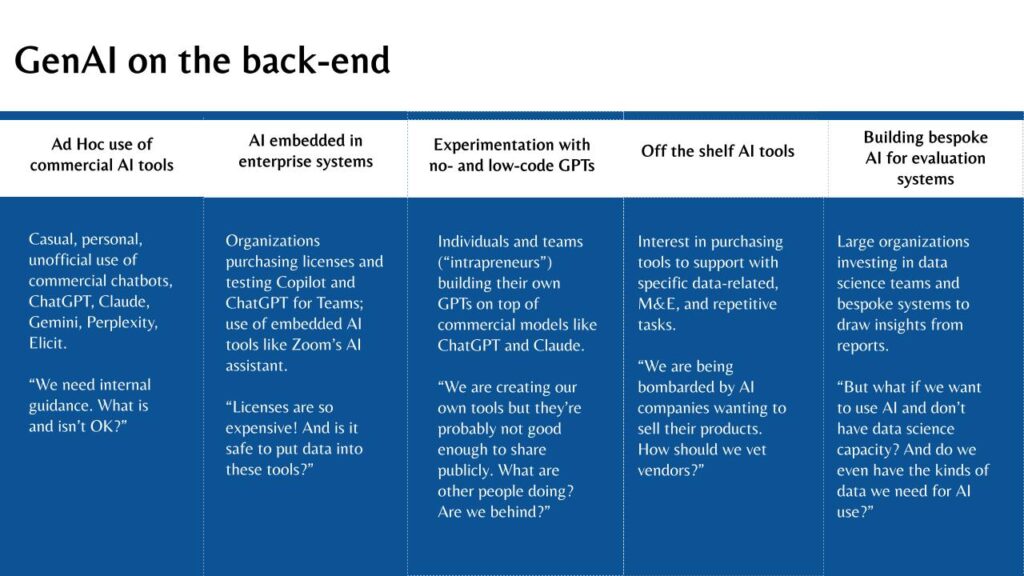

While it’s still early days for GenAI in MERL (and more broadly in the social sector), below is a typology of what I’m observing with regard to use of GenAI for Evaluation on the back-end.

Ad hoc use of commercial tools: Most organizations, firms, and independent evaluators seem to be in the first box – using these tools in a more or less ad hoc way to support their practice. This seems to also be the case with the social and behavior change sector, and the wider development and humanitarian sector as well.

AI embedded in enterprise systems: Microsoft Copilot is coming into more organizations, and common enterprise tools like Zoom are adding GenAI to their products. Some organizations are using these embedded tools, but many still find licenses too expensive, so the use of Copilot, for example, tends to be limited to a few team members who are tasked with piloting it within enterprise systems.

Experimentation with no- and low-code GPTs: In 2024 there’s also been a lot of ‘intrapreneur’ movement, with individuals creating their own GPTs to do specific things, using paid Team licenses for ChatGPT (which helps protect data). However, many people are not openly sharing what they are doing. There seems to be a hesitation, as people think their work is ‘not good enough’ or ‘not ready to share yet’. This makes it hard to understand which use cases have the most potential, and hard to share resources and learning. We need more open collaboration!

Off the shelf tools: Many organizations report that off-the-shelf tools are proliferating, and they are being bombarded by vendors trying to sell them GenAI tools and products. There is not enough clarity among organizations on which tools are safe or effective for which purposes (or at all!).

Bespoke AI for Evaluation Systems: Finally, we’re seeing large bi- and multi-lateral agencies with internal data science teams advancing with the creation of internal tools, processes, and AI-enabled systems designed to draw insights from large repositories of reports and other data. While there are still challenges with these, there is somewhat more open sharing of how it’s going. The World Bank, for example, is doing a fantastic job of sharing their learning.

Here’s a talk I did on November 5 for the European Evaluation Society that covers much of this, including some slides that MERL Tech Initiative collaborators Christopher Robert, Zach Tilton, Isabelle Amazon-Brown and I have developed over the past year. There are also fantastic presentations by ICF and the World Bank’s IEG on their more advanced uses of AI in evaluation. We’re running a workshop with the EES on December 13th where we’ll go a bit deeper. Sign up here!

The areas of front line staff-focused and community-facing GenAI also moved forward in 2024, with exciting work and a lot of learning happening on multiple fronts. These uses of GenAI were often hampered by challenges with data and data quality, available languages, and all the old ICT4D challenges of access and uptake. In some cases, it seems that GenAI has made us forget everything we ever learned about technology design and adoption. In other cases, there have been fantastic co-design processes happening.

What will 2025 bring?

What 2025 will bring remains to be seen, but I suspect there will be continued adoption and progression with GenAI, and likely also some big failures and even some big wins. It feels like we are still at peak GenAI hype, and I think it’s going to move into a more measured phase in 2025, as more organizations test and trial applications and document (please please document!) what they are doing and learning. I have a sense that things will also start to consolidate around a few sector tools that are having better results (or maybe just better funding and marketing). As Chris Robert suggests, on the back-end we might see folks graduate from chatbots to more automated workflows that offer more transparency and reliability of outputs.

For front-line workers and communities, while the dream (just like for ICT4D) is to create GenAI tools for communities, we may see these applications continue to be limited by infrastructure, inclusion, and accuracy challenges. While some organizations are tempted to charge forward with GenAI in these areas, it’s important to think about factors like phone capacity, device memory, cost of data, availability of electricity, and literacy. As we heard at the ICT4D conference in March in Accra, voice is likely the best way to scale GenAI in low-resource, low-literacy settings. The sector should also be paying attention to small AI, and locally/nationally built AI.

If we care about gender equity, we need to incorporate a gender lens (or to be even more adamant, a feminist lens) in the design and implementation of these GenAI tools or we risk yet another round of technological innovations that leave out half of the population and/or reinforce AI governance that cements inequality and long-outdated power dynamics. Finally, we should resist the temptation to create AI efficiencies that lead to cutting out front line staff and local enumerators. I hope we will focus our AI development on supporting front line workers and enhancing the quality of engagement with community members rather than on using it to put people out of a job!

What are you seeing?

Does this resonate with you? What do you think will happen with GenAI and NLP in the MERL space and the wider sector in 2025?

Great summary of the history of AI as it pertains to Evaluation. I look forward to the outcome of the workshop in December and I hope you will once again share a summary of the findings. Unfortunately, for some individuals and emerging evaluators in Africa, the cost of the workshops at this stage of the year is not feasible.

Hi Elma, and totally understand. We’ll be doing some more workshops in 2025, with a focus on AI for MERL in African contexts. Stay tuned!