A visual guide to today’s GenAI landscape

Guest post from Christopher Robert, Founder, Researcher, Product and Tech Enthusiast. Chris is a MERL Tech Initiative Core Collaborator. This post originally appeared here on April 6, 2024. (Editor’s note: Also see this great post from Chris on the challenges of building reliable AI Assistants).

Since OpenAI’s ChatGPT release officially ushered in our current era of All AI All the Time (AAIATT for short), there’s been a nonstop flurry of developments. The emerging field has become flooded with a cacophony of new terminology, all of which comes off as really confusing to outsiders. I’ve put together a simple conceptual framework for some of the most common terms being thrown about, and I thought I’d share that here (also here in this slide deck). If it’s useful, great!

GenAI and LLMs

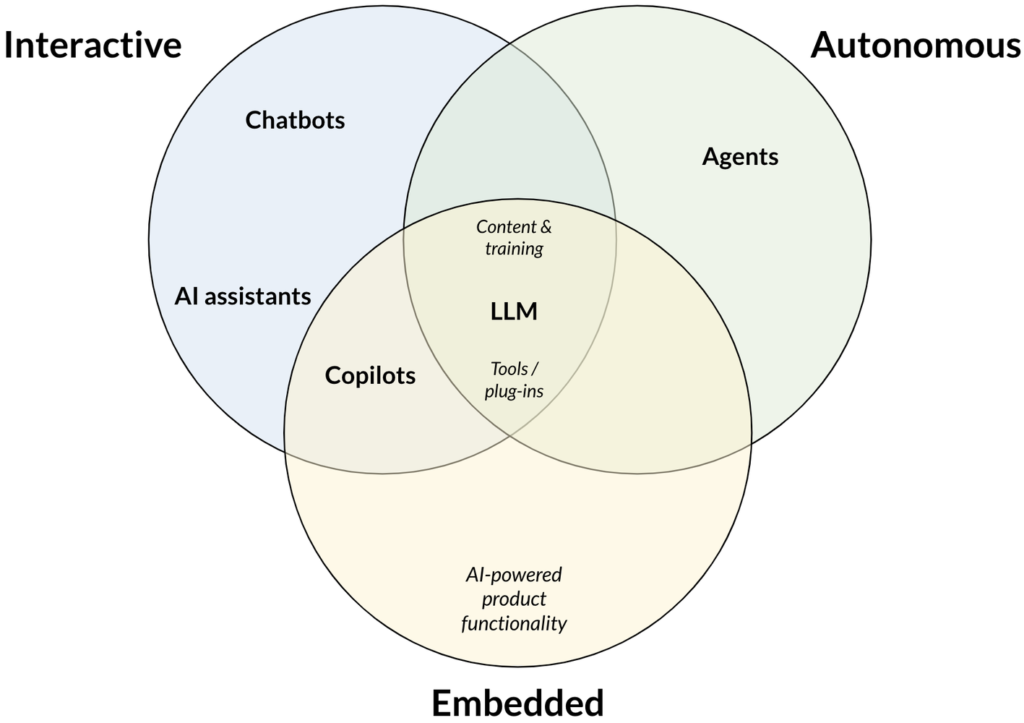

In this framework, I’ve tried to map the text-based generative AI landscape. Generative AI (GenAI) is all about AI systems that help you generate content, like text, images, or video. Here, I’m focused on GenAI for text, built on large language models (LLMs). ChatGPT is one example, and new LLMs are being released more or less daily at this point.

I’ll run through the map counter-clockwise from the top-left (and do see these slides if you want a quicker, more-visual version).

Chatbots

First, chatbots are text interfaces like ChatGPT that allow you to interact directly with an LLM. There are loads of chatbots out there now, including many fun and customizable ones like Poe and character.ai. These generally have a keyboard interface — where you type your question or comment and the chatbot responds in text — but increasingly chatbots include text-to-speech and speech-recognition tech that allows you to just converse with them verbally.

AI assistants

AI assistants are essentially chatbots that are focused on a particular subject or task. So, for example, I’ve worked on AI assistants for survey research and M&E as well as AI assistants for SurveyCTO users. You can use a generic chatbot like ChatGPT for lots of different things, but increasingly you have AI assistants that are better trained to help you with very specific tasks.

Copilots

Copilots are essentially AI assistants that are embedded within a single product, generally to help you use that product. GitHub Copilot, for example, helps programmers write code, embedded directly into the tools they use to develop software. Microsoft is also building copilots into Word, Excel, and the rest of its Office suite (though, confusingly, they also have Microsoft Copilot, which I guess is meant to be a copilot for, well, everything). Google and basically everybody else are doing the same.

What distinguishes copilots is not only that they’re embedded within products, but that they typically take advantage of the integration to make the AI more useful to you. So GitHub Copilot can see all of my code and directly make changes for me. Copilot in PowerPoint can create slides. Etc. The key thing is that it saves you a bunch of copying and pasting, allowing the AI to interact directly with the tools you’re using.

AI-powered product functionality

Now, the thing is that you don’t always interact directly with the AI. It can be powering product functionality in ways that don’t look at all like chatbots. If you’re analyzing qualitative data in Atlas.ti, for example, you can have it automatically code your data for you, and it’ll use an LLM behind the scenes, but you won’t see any of the actual back-and-forth with the LLM. The product basically talks to the AI for you, using it to perform tasks on your behalf. Meeting summaries from tools like Zoom, Teams, or Meet are other examples where the product uses the LLM on your behalf, and you might not even be aware.
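To make the "product talks to the AI for you" idea concrete, here is a minimal sketch of a feature that codes survey responses behind the scenes. Everything in it is an assumption for illustration: `auto_code` and `keyword_stub_llm` are hypothetical names, the stub stands in for a real LLM call, and this is not how Atlas.ti or any other product actually implements the feature.

```python
from typing import Callable


def keyword_stub_llm(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption for this sketch)."""
    # Only look at the text after the final "Response:" marker in the prompt.
    text = prompt.rsplit("Response:", 1)[-1].lower()
    return "cost" if "price" in text or "expensive" in text else "other"


def auto_code(
    responses: list[str],
    codebook: list[str],
    llm: Callable[[str], str] = keyword_stub_llm,
) -> dict[str, str]:
    """Assign one code per response; the user never sees the prompts."""
    coded = {}
    for response in responses:
        # The product writes the prompt on the user's behalf.
        prompt = (
            f"Pick exactly one code from {codebook} for the response below.\n"
            f"Response: {response}"
        )
        label = llm(prompt).strip()
        # Model output is free-form text, so fall back if it is not a valid code.
        coded[response] = label if label in codebook else "uncoded"
    return coded


if __name__ == "__main__":
    print(auto_code(["Too expensive for us", "Staff were friendly"], ["cost", "other"]))
```

The user only ever calls something like `auto_code(...)` through a button in the product. The prompt, the model's raw reply, and the validation step all stay hidden.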

Agents

Agents are AI systems that use LLMs in a multi-step, semi-autonomous way, in order to achieve some sort of objective. So Tavily AI, for example, helps you to execute desk research. You tell it what you want, like a one-page report on some arcane subject, and it’ll figure out the appropriate sequence of steps, execute those steps, and then give you the result. Generally, it’ll Google to find appropriate content, read, summarize, and analyze that content, and then put everything together for you.

What generally distinguishes agents is that they plan and execute multi-step sequences, in service of some objective. People are already giving agents access to lots of tools and unleashing them on increasingly complex tasks, which they break down into bite-sized tasks and set about executing, step by step. (This is one area where developments are particularly exciting — and unsettling.)
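The plan-then-execute pattern described above can be sketched in a few lines. This is a toy under stated assumptions, not how Tavily AI or any real agent works: the three tools are hypothetical stubs that just return strings, and `plan` is hard-coded where a real agent would ask an LLM to choose the steps.

```python
from typing import Callable


# Hypothetical tools. A real agent would call a search API, fetch pages, call an LLM, etc.
def search(query: str) -> str:
    return f"sources about {query}"


def summarize(text: str) -> str:
    return f"summary of {text}"


def write_report(text: str) -> str:
    return f"REPORT: {text}"


TOOLS: dict[str, Callable[[str], str]] = {
    "search": search,
    "summarize": summarize,
    "write_report": write_report,
}


def plan(objective: str) -> list[str]:
    """Stand-in planner. In a real agent, an LLM would pick these steps."""
    return ["search", "summarize", "write_report"]


def run_agent(objective: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Plan once, then execute each step, feeding every result into the next tool."""
    result = objective
    trace = []
    # The step cap is a simple guard against runaway plans.
    for step in plan(objective)[:max_steps]:
        result = TOOLS[step](result)
        trace.append(step)
    return result, trace


if __name__ == "__main__":
    report, trace = run_agent("solar pumps")
    print(report)  # REPORT: summary of sources about solar pumps
    print(trace)   # ['search', 'summarize', 'write_report']
```

Returning the trace alongside the result is a deliberate choice in this sketch: once a system is acting semi-autonomously, a record of which steps it took is what makes review possible.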

Infrastructure

Just a few final notes on the AI infrastructure at the heart of all of these systems:

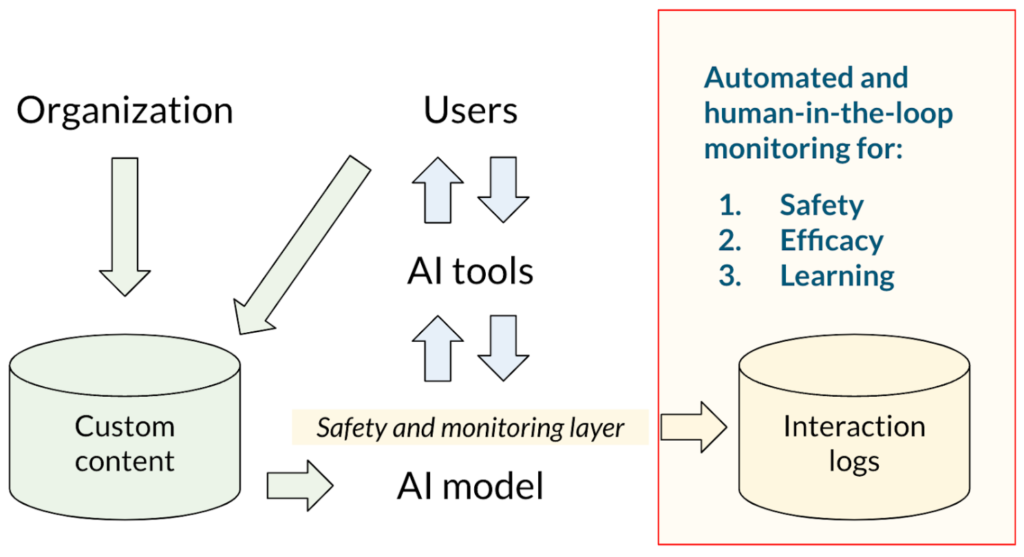

First, it’s really important that these systems be monitored for safety, efficacy, and learning. All of these systems should have (a) a secure (and generally anonymous) way to log interactions as well as (b) both automated and semi-automated systems for reviewing those interactions.

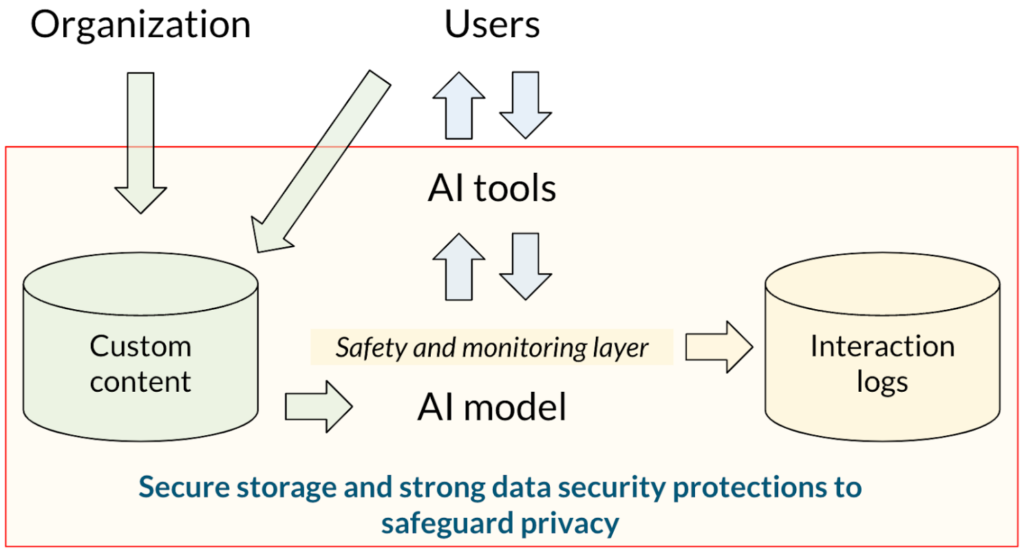

They should also take great care to secure both interactions and storage, to safeguard privacy.
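As a rough illustration of the logging and review points above, here is a minimal sketch that replaces the user ID with a keyed hash before logging and sets a flag for later human review. The key, the flag terms, and the function names are all hypothetical. Note also that this only pseudonymizes the user ID: the prompt and reply text can still contain personal data, so a real system would need redaction, encryption at rest, and access controls on top.

```python
import hashlib
import hmac
import json

# Hypothetical key for the sketch; in practice, load it from a secrets manager.
SECRET_KEY = b"replace-me"

# Illustrative terms that route an interaction to semi-automated (human) review.
FLAG_TERMS = ("password", "ssn")


def pseudonymize(user_id: str) -> str:
    """Replace a user ID with a keyed hash so log entries cannot be linked back without the key."""
    return hmac.new(SECRET_KEY, user_id.encode(), hashlib.sha256).hexdigest()[:16]


def log_interaction(user_id: str, prompt: str, reply: str) -> str:
    """Build one JSON log line with a pseudonymous user and an automated review flag."""
    combined = f"{prompt} {reply}".lower()
    record = {
        "user": pseudonymize(user_id),
        "prompt": prompt,  # may itself contain personal data; not redacted here
        "reply": reply,
        "needs_review": any(term in combined for term in FLAG_TERMS),
    }
    return json.dumps(record)


if __name__ == "__main__":
    print(log_interaction("alice@example.com", "What is my password?", "I can't help with that."))
```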

In the rush to adopt exciting new technologies, these things can be easy to neglect — but only at our own peril!

Again: see here for the slides. And please comment below if you have any suggestions or feedback you’d like to share!

Note: all screenshots by the author and all images created with OpenAI’s ChatGPT (DALL-E).


Thanks for sharing this post, Christopher. While I agree GenAI systems trained on homogenous data sets struggle with real-world application, focusing solely on human intervention during interaction seems like managing the output after the fact.

The core issue lies in the training data itself: its cleanliness, diversity, and racial representation. Biases within the data are then perpetuated by the AI model’s logic and underlying algorithms.

By neglecting data from underrepresented groups, GenAI development misses out on valuable perspectives. This not only reinforces existing societal inequalities but also limits the field’s potential. We need to address these biases at the source – during data collection and model design – not as an afterthought.

Looking forward to your next post on analysis at these two levels.

Thanks for that comment, Shweta. Implicit in all of my work is a division of labor between those building foundation models and those adapting those models for use within various applications and settings. Those of us on the adapting side aren’t empowered (or, frankly, concerned) to re-think how the foundation models are generated. Rather, we are focused on how to make those models as safe and useful as possible for different populations and use cases. In my case, the concerns you raise haven’t come up as relevant in, e.g., helping SurveyCTO users get questions answered from the docs, or helping users code complex expressions from natural language. That’s not to say that the concerns aren’t valid, just that I think they apply more in some circumstances than others.