When Might We Use AI for Evaluation Purposes? A discussion with New Directions for Evaluation (NDE) authors

On February 21st, 2024, we hosted a session titled “When Might We Use AI for Evaluation Purposes?” spotlighting the integration of Artificial Intelligence (AI) into evaluation practice. The session featured several authors of the November 2023 Special Edition of New Directions for Evaluation (NDE), which focused on AI in Evaluation.

Check out the webinar recording and speaker slides.

The session aimed to explore AI’s origins, its applications in evaluation, the challenges of ensuring validity with large language models, and practical examples from the consulting world of how to leverage AI. Our speakers were:

- Sarah Mason, NDE co-editor-in-chief and Director, Center for Research Evaluation, University of Mississippi

- Izzy Thornton, Principal Evaluation Associate at the University of Mississippi

- Tarek Azzam, Professor, University of California, Santa Barbara

- Sahiti Bhaskara and Blake Beckmann, Intention 2 Impact

- Bianca Montrose Moorhead, NDE co-editor-in-chief and Associate Professor, Research Methods, Measurement, and Evaluation; Director of Online Programs, Research Methods, Measurement, and Evaluation, University of Connecticut

Overview of the Special Edition on AI and Evaluation

Sarah introduced the topic, explaining that the special edition came about in response to the excitement around Generative AI (Gen AI) in late 2022. Gen AI differs from earlier kinds of AI because it produces new content based on the data it was trained on, and advances in Natural Language Processing (NLP) make human-to-computer communication easier. The NDE editors (Sarah and Bianca) felt that these advances mark a clear “new direction” for evaluation. They have very practical implications for how we do what we do: the methods we use, time and costs, ethics, and competencies. All of this will, in turn, shape how we train evaluators. The NDE Special Edition on AI aimed to bring together a group of authors who could write about this emerging space for evaluation. Read Sarah’s NDE article.

AI and its relevance to Evaluation

Izzy offered a comprehensive breakdown of artificial intelligence’s foundational principles and its relevance to the field of evaluation, drawing from her background in sociology and applied data science.

She highlighted that machine learning models can be supervised or unsupervised, depending on the task they are set up to do. Unsupervised models are generally used to identify clusters or patterns that we might miss as human observers, whereas in supervised models, humans decide whether the patterns the computer detects are correct. Sometimes computers detect patterns but are not sure how to categorize them, so humans come into the loop to review them. An interesting point here is that human supervision of models brings in potential bias, errors, and validity checks that cannot be standardized the way they can be with unsupervised models.
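To make the distinction concrete, here is a minimal, illustrative Python sketch; it is not drawn from Izzy’s talk or article. It assumes one-dimensional toy data, such as hypothetical survey scores. The unsupervised part groups unlabeled values with a simple k-means, while the supervised part learns from human-labeled examples using a nearest-centroid rule and flags ambiguous items for a person to review. The function names, the labels “low” and “high”, and the review `margin` are all hypothetical choices made for illustration.

```python
# Illustrative toy contrast between unsupervised and supervised learning.
# All names, labels, and thresholds here are hypothetical.

def kmeans_1d(values, k=2, iterations=10):
    """Unsupervised: group unlabeled numbers into k clusters (assumes k >= 2)."""
    ordered = sorted(values)
    # Start with centroids spread evenly across the sorted data.
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to its cluster mean (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return clusters

def train_nearest_centroid(labeled):
    """Supervised: learn one centroid per label that a human assigned."""
    sums = {}
    for value, label in labeled:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + value, count + 1)
    return {label: total / count for label, (total, count) in sums.items()}

def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))

def needs_human_review(centroids, value, margin=1.0):
    """Flag values that sit almost equally close to two labels for a human to check."""
    distances = sorted(abs(value - c) for c in centroids.values())
    return len(distances) > 1 and distances[1] - distances[0] < margin
```

For example, `kmeans_1d([1, 2, 3, 10, 11, 12])` returns `[[1, 2, 3], [10, 11, 12]]`: it finds two groups without being told what they mean. The supervised model, by contrast, only knows the categories “low” and “high” because a person supplied labeled examples, and a value such as 6.5 that sits midway between them is flagged for human review.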

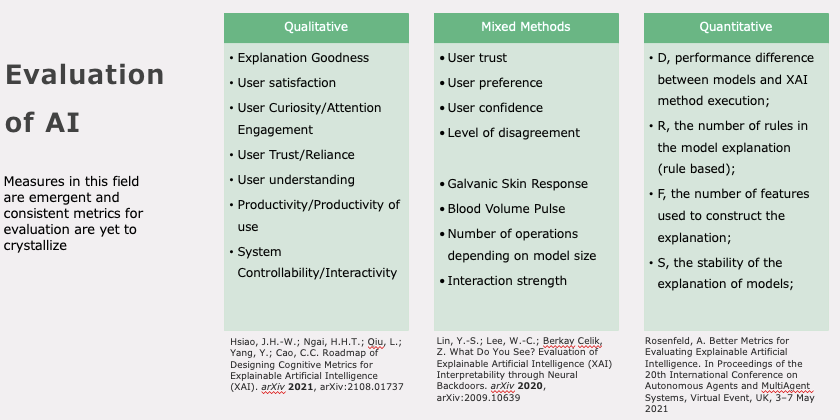

Izzy emphasized that AI models can only be as strong as the data on which they are trained, and that all of these data points were created at some point by humans. Data sets used to train AI models are enormous, and a number of websites and social media platforms, such as Reddit, are signing deals to sell their data for training Large Language Models. But all of these data sets carry biases that creep into the models and their outputs. The inner workings of the models that machines generate are not always visible to humans, making it difficult to fully understand how they reach decisions. In addition to offering a number of real-world examples, Izzy laid out the complexities and challenges evaluators face when integrating AI into their work and provided a framework for evaluating AI (see the image below). See Izzy’s slides. Read Izzy’s article.

Practical examples of AI for Evaluation

Sahiti and Blake’s NDE chapter offers practical experiences and insights on experimenting with emerging AI tools within a small consulting firm. They emphasized the potential for AI tools to enhance efficiency and help to refine evaluation methodologies.

The team shared results from testing and comparing tools, including: ChatGPT vs. organic search; QDA Miner for text retrieval, coding, and document analysis; Fathom AI for data visualization and qualitative analysis; Rayyan for literature reviews; CoLoop for qualitative analysis of focus groups and interviews; and Ailyze for qualitative thematic analysis and generating text summaries.

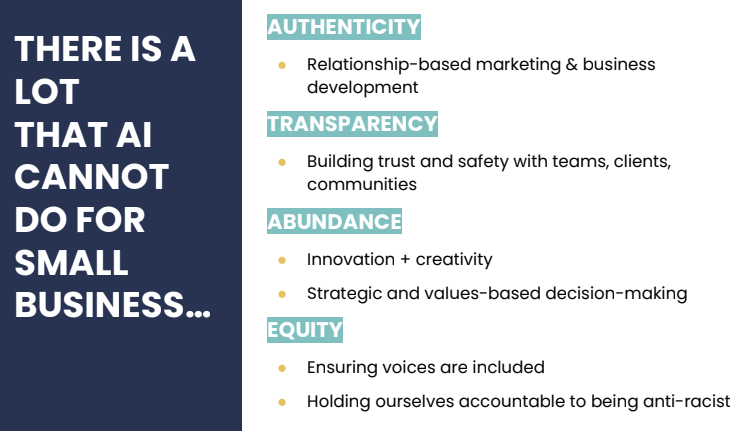

They found that these tools helped them work more quickly, discern deeper insights than manual analysis, improve accuracy and comprehensibility, conduct secondary analysis, accommodate multiple data types, and take on larger projects as a small team. They encountered a number of challenges as well, including variation in results, over-indexing of data, the prohibitive cost of AI tools, a potentially less intimate understanding of cultural themes and contexts, and the lack of a research base on accuracy. They also highlighted key things that AI cannot do, as seen in the image below. See I2I’s slides. Read I2I’s article.

Addressing integrity and validity

Tarek explored AI and validity when using large language models (LLMs) in evaluation. A key point he made is that high-quality evaluation is not a technocratic exercise. It requires a lot of back and forth; awareness, knowledge, and understanding of the context; and the ability to generate insights about a program’s effectiveness that can be backed up in valid and conclusive ways.

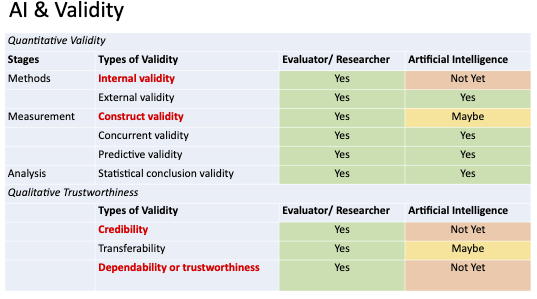

Tarek shared a list of validity aspects that evaluators look at to support claims made with qualitative and quantitative data. He focused on the importance of being able to make causal claims. Ensuring the validity of a causal claim requires an in-depth understanding of context, which AI is currently unable to provide. Similarly, construct validity requires establishing whether we are measuring what we hope to be measuring. It is currently unclear whether AI can help with this, though it might be able to in the future. AI is, however, currently able to make some predictions with a degree of validity.

In terms of qualitative validity, AI is good at pattern recognition and summarizing, but it is limited in establishing the credibility and trustworthiness of findings. Evaluators often try to understand, for example, the honesty of responses or the power dynamics during a focus group discussion. AI does not currently perform well on these aspects of qualitative trustworthiness (see the image below). While AI can enhance evaluative practice, Tarek cautioned against the uncritical acceptance of AI-generated outcomes. See Tarek’s slides. Read Tarek’s article.

Establishing evaluation criteria for AI applications

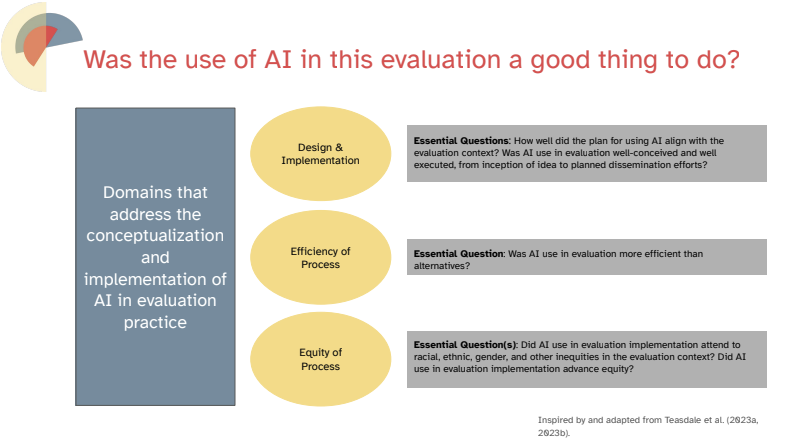

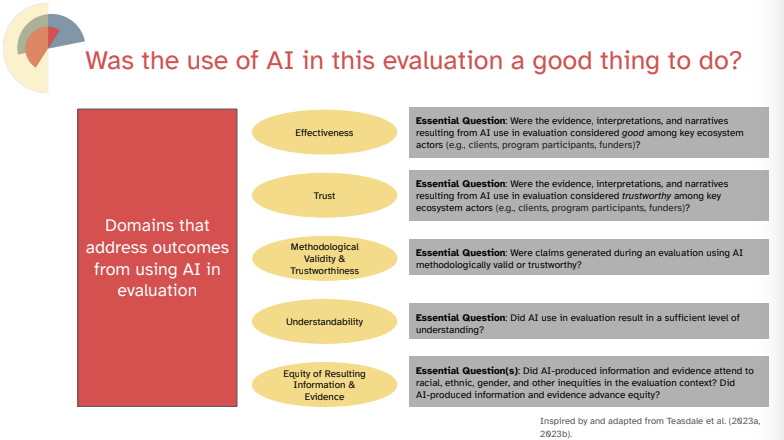

Bianca closed out the session by providing a framework for the usefulness and limits of AI in Evaluation. She first pointed out that criteria identify and define the aspects on which the thing being evaluated is judged (Davidson, 2005; Scriven, 2015). While the field has clear evaluation criteria (covered in depth in her chapter), there are currently no criteria for assessing the use of AI in Evaluation.

To develop a set of criteria, she reviewed the full set of articles in the Special Edition, looking for clear and emerging criteria, as well as gaps, related to judging AI’s use and outcomes in evaluation work. Her framework and criteria are shared below. She emphasized that these are meant to be a first step in the process and encouraged the MERL Tech Community to provide leadership on the use of AI in evaluation and in other fields that require rigor. Her article offers guidance on key questions we should take into consideration. See Bianca’s slides. Read Bianca’s article.

Points raised in the chat

In addition to the speakers’ points, a lively discussion took place in the chat. Points that participants raised throughout the session included:

The issue of data quality and its impact on AI/ML models is a real concern, especially for organizations working in data-poor spaces. It would be helpful to have use cases where organizations have deliberately addressed underlying data inequities before deploying AI/ML tools for social impact work. Most users and evaluation practitioners don’t have the ability to truly interrogate the data that foundational AI/ML models are trained on, despite the availability of model cards. As practitioners, we need to develop our literacy around this issue and establish technical guardrails in AI tools to mitigate the risks of biased data.

AI-powered qualitative analysis and transcription tools, like CoLoop, MAXQDA, and Atlas.ti, raised questions around ethics, privacy safeguards, and the reliability of and trust in the analysis these tools generate. One person said they prefer to do coding and clustering manually for this reason. The discussion touched on the need for informed consent and data policies when using these tools.

Performance of AI-enabled qualitative analysis tools in non-European languages was also a key concern. Some people were curious about how these tools would work with interviews and focus group notes in languages like Mandarin, Hindi, and Arabic, as opposed to the more commonly used European languages. One person shared an example of poor performance of ChatGPT in the Lao language, highlighting the challenges of low-resource languages.

Participants raised concerns about the risk of these AI tools “scrubbing” out the richness and outlier opinions within qualitative data as they focus on identifying themes and consistency. One of the speakers noted that the CoLoop tool is designed to include those outliers, even if they are held by only one or two people, and that the tool is most useful after the human team has done an initial analysis and is aware of the unique perspectives.

Participants discussed the tension between rigor, time, and breadth in evaluation practice, and AI’s promise of making the process both rigorous and fast. There was skepticism, along with a fear that the competitive nature of the industry might lead to premature use of AI tools, potentially putting analytical quality at risk. This highlighted the need to develop responsible AI practices and policies in the evaluation community.

Wrapping up

The hour was packed with information on AI and NLP for evaluation and the ethical considerations that come with their adoption. As the field of evaluation grapples with these emerging technologies, the discussions highlighted the critical need for evaluators to better understand AI in order to fully leverage its benefits while mitigating its challenges.

Check out the NDE Special Edition for more on these topics. While the journal is behind a paywall, you can access it freely if you are a member of the American Evaluation Association.

You can also watch the full webinar and see the speakers’ slides here. Join the NLP-CoP if you’d like to get notified of future learning sessions like this one!
