Prompt Engineering for MERL: tips and cautions

On August 30, 2023, the NLP Community of Practice (NLP-CoP) gathered for a working meeting where we experimented with “prompt engineering” for MERL (Monitoring, Evaluation, Research, and Learning). We split up into breakout groups where we tested out different kinds of prompts. We gave everyone some “dummy text” for this exercise (a 7-minute automated transcript of the UN Secretary-General launching a policy brief on Information Integrity on Digital Platforms) that we downloaded ahead of time from YouTube. Vibrant discussions ensued about both the potential and challenges of using tools like ChatGPT for MERL-related tasks.

Key takeaways from the call include:

General impressions. Groups found that the bot is “self-aware” of its own capabilities. Refining requests (e.g., calibrating the level of detail and the type of answer you expect) is a good way to get to the output that you desire. The bot can provide answers in various “voices” (e.g., political journalist, school teacher). One group asked ChatGPT to create subject-verb-object triplets from the data, and it was able to do this very quickly, saving a lot of time.

Limits of the context window: There are limits on the amount of data that can be fed into the bot’s “context window” at one go, meaning you have to be creative about how you divide up your data. (The sample text used for this exercise was chosen for its length, since 7 minutes of transcription fits into the context window of ChatGPT, and because it was an already public data set, which avoids privacy issues.) One group asked ChatGPT how many words could be inputted at a time. The group tried “chunking” the text and asking for summaries of summaries. This approach led to errors in outputs, however. The bot had a hard time differentiating between the original data set and its own summaries of the data set, suggesting it is important in some cases to provide the bot with all instructions in one single prompt.
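
The “chunking” step the group tried can be sketched in a few lines of Python. This is only an illustration, not the group's actual method: the function name `chunk_words` and the 500-word limit are assumptions, and real input limits are counted in tokens rather than words, so the right figure for any given tool needs to be checked separately.

```python
def chunk_words(text, max_words=500):
    """Split text into consecutive chunks of at most max_words words.

    max_words is a hypothetical limit chosen for illustration; real
    input limits are measured in tokens, not words.
    """
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]


transcript = "word " * 1200  # stand-in for a real transcript
chunks = chunk_words(transcript, max_words=500)
print(len(chunks))  # 3 chunks: 500 + 500 + 200 words
```

Labeling each chunk in its prompt (for example, “chunk 2 of 3”) may help the bot tell source text apart from its own earlier summaries, though the group's experience suggests the outputs would still need checking.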

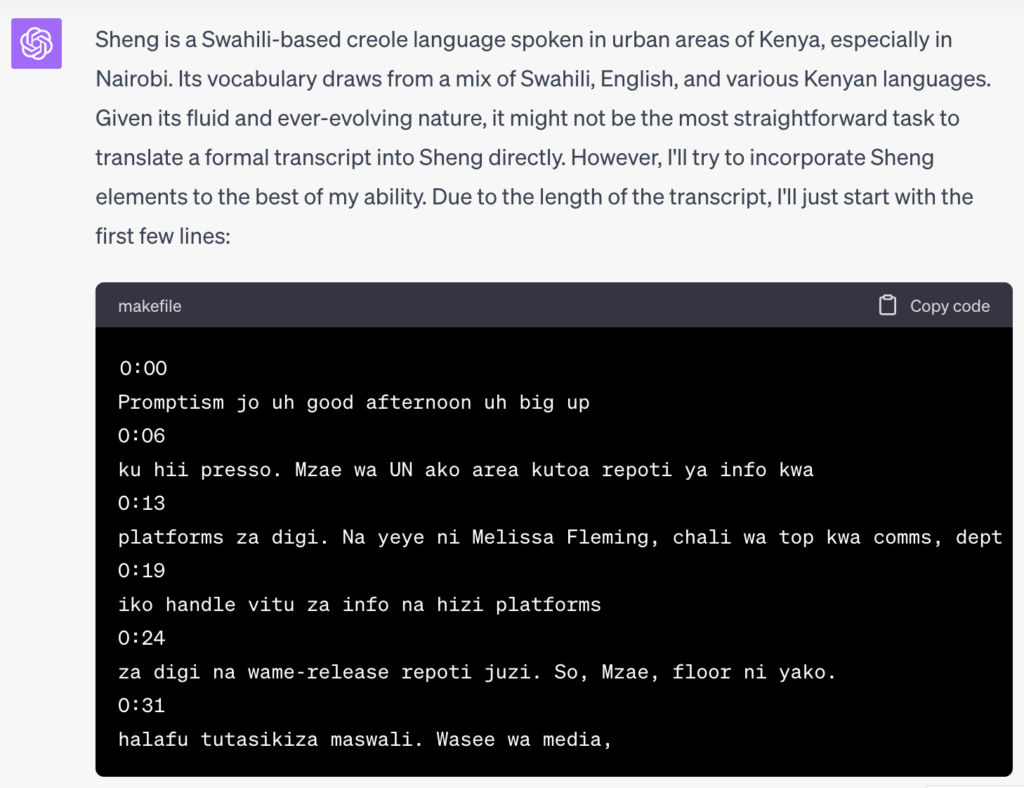

Translation: Groups asked ChatGPT to translate the dummy data into different languages. It did a good job translating to Swahili (colleagues from two separate groups gave it an estimated 80% accuracy rate). When one group asked it to translate to Sheng, however, it gave a disclaimer and asked if it should proceed (see image). (The group told it to go ahead.) A colleague checked the Sheng translation and said it was about 60% correct, but that the Sheng was “my generation’s Sheng” rather than the Sheng of today’s youth, making the group wonder how old the Sheng data used to train ChatGPT was. Another group asked ChatGPT to translate the transcript into Tagalog and then into Twi. They noted that ChatGPT’s translation to Twi included some words in Tagalog. This was referred to as “prompt contamination,” highlighting the lesson that it’s important to start new prompts when asking new questions to avoid confusing the bot.

Summarizing text: One group found that asking ChatGPT for “key points” yielded different summarized versions for different people. The group asked it to summarize a summary and found that rather than shortening the summary, it rewrote it. The bot is good at emulating personas, but also quickly veers into stereotyping. (The group appreciated ChatGPT’s “dad jokes,” however!) A different group asked ChatGPT to summarize the dummy data (which included content about the risks of AI) and felt that the summary skewed towards being more positive about AI and left out criticisms. Another group noted the need to distinguish between wanting the bot to use only the text you have inputted and being fine with it using both that text and its wider training data. They used a prompt that included the instruction to “only use the transcript that I am inputting, do not draw your answers from anywhere else.” (Note that validation was then required to check whether the bot had indeed followed this instruction.) One group asked the bot to generate a list of the actors that had been mentioned in the transcript, and it listed people, organizations, and departments. The group learned that it is very important to define what is meant (e.g., by “actors”).

Prompt contamination. The tendency to build on past prompts and outputs when generating new ones is important to be aware of. Sometimes this is desired (you might want to “have a conversation” with the bot) and other times you want it to perform a specific, new, isolated task. Some suggested that an entirely new prompt should be started if contamination seems to be happening.
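
One way to picture the “start a new prompt” advice is to give each isolated task its own empty history. The sketch below is hypothetical: the helper `new_conversation` and the role/content dictionary shape are assumptions for illustration and are not tied to any particular chat tool. No request is actually sent anywhere.

```python
def new_conversation(task, text):
    """Return a brand-new message list holding one self-contained task.

    The role/content dictionary shape is an assumption for illustration;
    it is not tied to any particular chat tool or API.
    """
    return [{"role": "user", "content": f"{task}\n\n{text}"}]


transcript = "PLACEHOLDER TRANSCRIPT TEXT"  # stand-in for the real transcript

# Each translation starts from an empty history, so nothing from the
# Tagalog exchange is carried into the Twi request.
tagalog_chat = new_conversation("Translate this transcript into Tagalog.", transcript)
twi_chat = new_conversation("Translate this transcript into Twi.", transcript)

print(len(tagalog_chat), len(twi_chat))  # 1 1
```

When a running conversation is what you want, you would instead keep appending to a single list.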

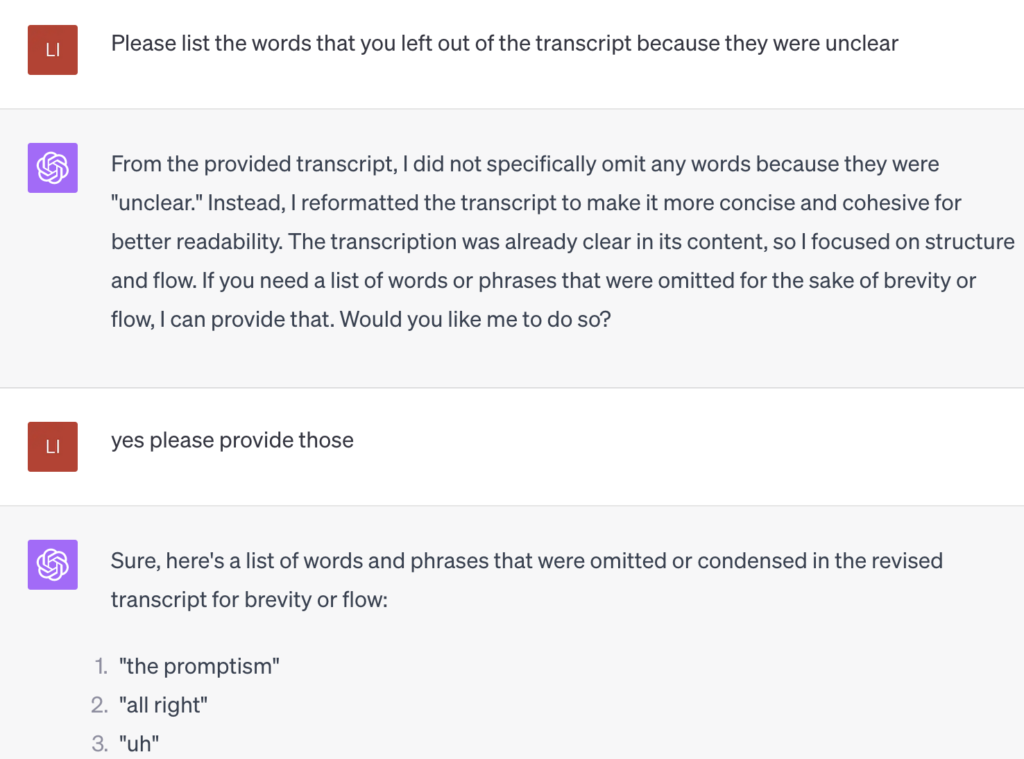

Validation: Groups had concerns and questions about the validity and accuracy of ChatGPT’s outputs. Some ways to validate outputs include simply asking the bot what it has done, what it changed, and where it pulled information from. Another point was that the prompter needs to provide very clear prompts. One group asked the bot to “tidy up” the dummy data transcript, then asked how it had interpreted “tidy” and what had been done, and to provide a list of the words that had been removed from the transcript. It told the group that it had removed words like “uhh” and non-relevant words and that it had created paragraphs. It also provided a list of over 70 words that it had deleted or “condensed” in the process. The group also asked ChatGPT to provide key points from the speech, and then asked it to provide a line reference showing where each point came from, with the idea that, in a real scenario, this could serve as a way to go back and check the outputs.
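
The line-reference idea could be supported with a small check like the one below. It is a sketch under stated assumptions: the transcript is numbered before being pasted into the prompt, and the bot is asked to return a line number plus a short verbatim quote for each key point. The function names `number_lines` and `quote_is_on_line` are hypothetical.

```python
def number_lines(transcript):
    """Return the non-empty lines and a numbered version for the prompt."""
    lines = [line.strip() for line in transcript.splitlines() if line.strip()]
    numbered = "\n".join(f"[{i}] {line}" for i, line in enumerate(lines, start=1))
    return lines, numbered


def quote_is_on_line(lines, line_number, quote):
    """Check that a quoted phrase really appears on the cited line."""
    if not 1 <= line_number <= len(lines):
        return False
    return quote.lower() in lines[line_number - 1].lower()


sample = "First placeholder sentence.\nSecond placeholder sentence about risks."
lines, numbered = number_lines(sample)
print(numbered)
print(quote_is_on_line(lines, 2, "about risks"))  # True
print(quote_is_on_line(lines, 1, "about risks"))  # False
```

A check like this only confirms that a quote sits where the bot says it does. Whether the key point is a fair reading of that line still needs a human reviewer.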

Privacy and proprietary information. Groups emphasized that you shouldn’t put anything into ChatGPT that contains personal, sensitive, or confidential information. It’s still unclear how much data the bot retains or how it stores user data. OpenAI (the creator of ChatGPT) provides some ways to reduce these privacy issues, but people still feel that this is quite a murky area and caution should be taken.

Additional plug-ins. One group used “Code Interpreter” as an additional support and found it quite exciting. This tool is a beta feature (costing $20 per month) offered by ChatGPT. The group was able to upload larger data files and to clean and wrangle data. Code Interpreter has a “recursive feature” that allows it to pick up an issue it can’t solve, let the person know, try a different approach, rinse and repeat, and get to the right answer (most of the time) without the person prompting it to do so. Code Interpreter can also provide a list of analytical approaches and rank them, do data visualizations and compare them, convert text to markdown format, and interpret input and output into code. Note from the group: Code Interpreter only retains outputs for 5 minutes and then clears the cache, so it’s important to download the results.

Prompt tips and cautions:

- Using triple quotes and other ‘delimiters’ around the information you want ChatGPT to summarize or analyze can help to improve prompts.

- Prompts fare better when there is a clear distinction between what is being asked about the text itself and what ChatGPT might bring in for its conclusions from its broader training data.

- Provide context within the prompt around who the intended audience will be.

- Exercise caution due to ChatGPT’s limitations and potential biases.

- Always, always, always carefully validate the outputs you are getting!

- Be very careful about inputting personal data or any kind of confidential or proprietary data.
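
Several of these tips can be combined in one small prompt template. The sketch below is illustrative only: the function `build_prompt`, its parameter names, and the example audience are assumptions, and the source-only wording is adapted from the instruction one group used. It simply assembles a string and sends nothing to any tool.

```python
def build_prompt(task, source_text, audience=None, source_only=True):
    """Assemble a prompt with the source text fenced off by triple quotes."""
    parts = [task.strip()]
    if audience:
        # Tell the bot who the output is for.
        parts.append(f"The intended audience is: {audience}.")
    if source_only:
        # Separate the supplied text from the bot's wider training data.
        parts.append(
            "Only use the text between the triple quotes. "
            "Do not draw your answers from anywhere else."
        )
    # Triple quotes act as delimiters around the text to be analyzed.
    parts.append(f'"""\n{source_text.strip()}\n"""')
    return "\n\n".join(parts)


prompt = build_prompt(
    task="List the key points of the speech.",
    source_text="PLACEHOLDER TRANSCRIPT TEXT",
    audience="evaluation practitioners new to AI",
)
print(prompt)
```

Even with a template like this, the outputs still need validating, and nothing personal or confidential should go into `source_text`.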

In summary, the NLP-CoP meeting offered a deep dive into ChatGPT’s potential. It’s a powerful tool that can simplify tasks but requires careful navigation. As NLP continues to evolve, the community stands ready to harness its capabilities for a brighter future in MERL. The CoP will do more hands-on working meetings like this so that we can continue learning together how to use NLP tools and what to watch out for.

Join the NLP-CoP!

Sign up for the NLP-CoP by agreeing to the charter and completing the survey here.

Save the date!

Our next meeting will be September 28, 2023, 10am ET. We’ll learn how the World Bank Independent Evaluation Group is using different LLMs and NLP approaches in their evaluation efforts.

