What has the World Bank’s Independent Evaluation Group learned from experimenting with NLP?

Over the last few months, the World Bank’s Independent Evaluation Group (IEG) tested and compared several Natural Language Processing (NLP) and Generative AI tools, including ChatGPT, GPT-4 via API, mAI (the World Bank’s enterprise version of GPT-4), and Google’s LaMDA-powered Bard chatbot, to understand their potential and limitations across applications throughout the organization. These tests were designed carefully.

The team reviewed the available literature, used only publicly available data, and closely scrutinized outputs to ensure that they were comparable. The tests were also designed with a range of users in mind, including data scientists, analysts, and evaluation managers with no specialized knowledge of data science.

On September 28, Estelle Raimondo and Harsh Anuj shared what they learned from these experiments with the NLP-CoP community, detailing what did and didn’t work for their team.

What Worked Well

Document Summarization: The team tested whether ChatGPT could make sense of large text reports. The model was able to distill these reports based on key sections such as the table of contents and executive summaries. However, ChatGPT restricts the amount of text that can be entered, and working around these “token limits” posed challenges, raising questions about whether the method and the API are suitable for this task.
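One common way to work around an input limit is to split a long report into smaller pieces, summarize each piece, and then combine the summaries. The post does not say which workaround the team tried, so the sketch below is only an illustration. The function name `chunk_words` is hypothetical, and word count is used as a rough stand-in for tokens; a real tokenizer will count differently.

```python
def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words.

    Assumption: word count is only a crude proxy for the model's token count.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


# Stand-in for a long report: 2,500 placeholder words.
report = "word " * 2500
chunks = chunk_words(report, max_words=1000)
print(len(chunks))  # 3
```

Splitting on a fixed word count can cut a sentence or section in half, so splitting on paragraph or section boundaries may give better summaries.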

Coding Assistance: The team tested ChatGPT and GPT to see if they could provide coding support. The team found that the tools could generate code, add comments to an R script, provide detailed descriptions of user-defined functions within the code, and summarize the code. ChatGPT in particular provided excellent responses when given detailed instructions.

Sentiment Analysis: The team looked at how well the tools could identify sentiment in text (positive, negative, or neutral), compared with results for data they had previously labeled by hand. One of the tools, GPT, did very well (95% accuracy). This was expected, as understanding general emotions in text is something a tool like this should excel at. However, ChatGPT could only check 50 documents at once, and sometimes it would even invent (“hallucinate”) new sentences! Given this, the team found that it’s better to use the API, which could handle all the data at once. Another finding was that, when using a chatbot, it’s better to start fresh with each new piece of text to avoid errors.

While sentiment analysis can be challenging due to differences in meaning across languages and cultures, in this case the documents were all written in “bureaucratic World Bank language.” The text was therefore fairly standardized across documents, which made the sentiment analysis easier to perform accurately.
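The comparison against hand-labeled data can be sketched as a simple accuracy calculation, together with the kind of batching a 50-document limit would force. This is a minimal sketch, not the team’s code: the function names `accuracy` and `batches` are hypothetical, and the labels are invented placeholders.

```python
def accuracy(predicted: list[str], gold: list[str]) -> float:
    """Share of model labels that match the hand-assigned labels."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty list")
    matches = sum(p == g for p, g in zip(predicted, gold))
    return matches / len(gold)


def batches(items: list[str], size: int) -> list[list[str]]:
    """Split items into consecutive groups of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


# Invented placeholder labels, not the team's data.
hand_labels = ["positive", "negative", "neutral", "positive"]
model_labels = ["positive", "negative", "neutral", "negative"]
print(accuracy(model_labels, hand_labels))  # 0.75

# 120 placeholder documents split to respect a 50-document limit.
documents = [f"doc {i}" for i in range(120)]
print([len(b) for b in batches(documents, 50)])  # [50, 50, 20]
```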

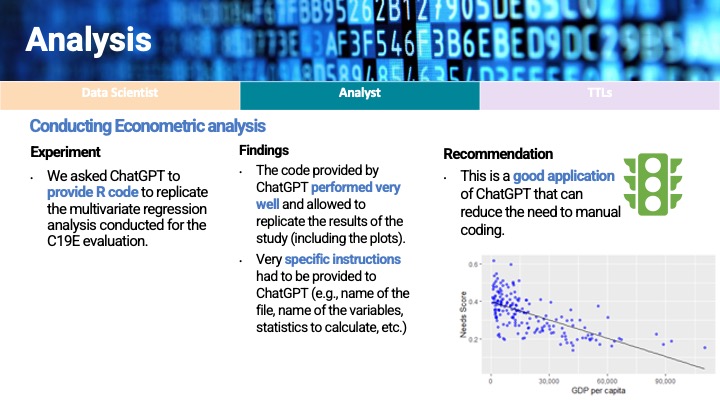

Econometric Analysis: The team turned to ChatGPT for assistance in crafting R code for a multivariate regression analysis for IEG’s COVID-19 evaluation. When given specific instructions for the task, ChatGPT helped replicate the team’s findings and eliminated the need to write complex code from scratch. However, users still need a very clear understanding of the desired code or expected outputs before asking ChatGPT to perform this function, so that accuracy and efficiency can be ensured.

Post-Analysis: The team also experimented with capabilities for post-evaluative tasks. Specifically, they tasked ChatGPT with summarizing a hefty 200-page Country Program evaluation report. The outcome? A concise, well-articulated, and accurate overview that captured the report’s main themes. Tools like ChatGPT can be invaluable for professionals who are already well-versed in a document’s content, because they streamline the drafting of a summary and save both time and effort.

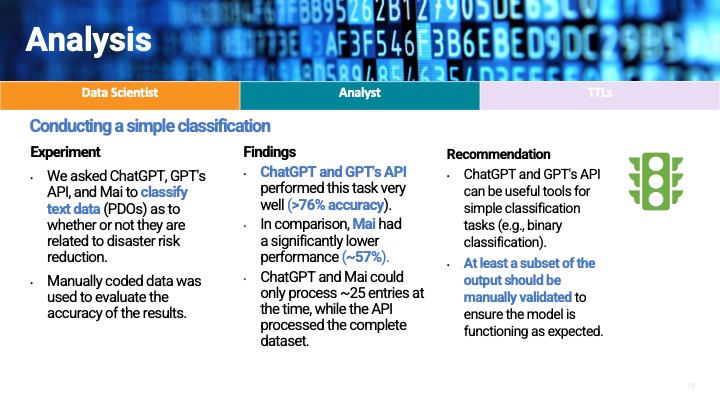

Text classification: Three different tools – ChatGPT, GPT API, and mAI – were used to sort text related to disaster planning. When compared to manually checked results, ChatGPT and the GPT API were correct about 76% of the time, while mAI was right 57% of the time. The team hasn’t analyzed the 24% of erroneous results to understand what ChatGPT and the GPT API are unable to get right, noting that this is currently less of a priority because of the additional safeguards they’ve put in place to avoid making decisions based on bad classifications. For example, the team held check-ins, conducted manual reviews, and shared the findings with the program team. The team also focused on reducing false negatives by reviewing all findings.
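If the erroneous results were examined later, one simple starting point would be to break the errors down by type and list the false negatives for manual review. The sketch below assumes a binary label (relevant or not relevant to disaster planning), which the post does not confirm; the names `Confusion`, `confusion`, and `false_negative_indices` are hypothetical and the labels are invented placeholders.

```python
from typing import NamedTuple


class Confusion(NamedTuple):
    true_pos: int
    false_pos: int
    false_neg: int
    true_neg: int


def confusion(predicted: list[bool], gold: list[bool]) -> Confusion:
    """Count each outcome type for a binary classifier against manual labels."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    pairs = list(zip(predicted, gold))
    return Confusion(
        true_pos=sum(p and g for p, g in pairs),
        false_pos=sum(p and not g for p, g in pairs),
        false_neg=sum(not p and g for p, g in pairs),
        true_neg=sum(not p and not g for p, g in pairs),
    )


def false_negative_indices(predicted: list[bool], gold: list[bool]) -> list[int]:
    """Positions of items the model missed, to be queued for manual review."""
    return [i for i, (p, g) in enumerate(zip(predicted, gold)) if g and not p]


# Invented placeholder labels, not the team's data.
manual = [True, True, True, False, False]
model = [True, False, True, False, True]
print(confusion(model, manual))
# Confusion(true_pos=2, false_pos=1, false_neg=1, true_neg=1)
print(false_negative_indices(model, manual))  # [1]
```

Overall accuracy hides the difference between the two error types, which matters when missed items are costlier than false alarms.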

What Didn’t Work:

Generating images for geospatial analysis: The team often uses images as data points to track changes in an area of interest. To do this, they need to classify different parts of the images, and training a model for that requires a large number of synthetic images. The team asked DALL-E to generate urban images, specifically capturing the urban essence of Bathore, Albania. They explored two methodologies: generating visuals from text prompts and generating them from image uploads. To gauge DALL-E’s effectiveness, the team compared its outputs to pre-existing data and prior analyses. DALL-E can only offer four images per prompt, while the team normally requires hundreds or thousands of images, so this constraint posed efficiency concerns. Additionally, assessing how closely DALL-E’s outputs resembled real city visuals proved challenging, which made it harder to establish the tool’s validity in the team’s context.

Literature reviews: The team also tested writing a brief literature review on the advantages and challenges of using business indicators. While ChatGPT provided a longer, more detailed response, there were challenges in verifying the authenticity of the information provided and potential “hallucinations” in the content. The platform might not be the most suitable for thorough literature reviews, yet it can offer valuable background knowledge on topics. Users should double-check outputs against reliable sources. A peculiar observation was the model’s ability to generate seemingly credible references, complete with journal names and page numbers, that, upon investigation, turned out to be non-existent. Resetting chat sessions in between prompts was highlighted as beneficial, especially during prolonged interactions.

Evaluative synthesis: The team used ChatGPT to ingest text from six Project Performance Assessment Reports (PPARs) and generate an evaluative synthesis. When compared to the synthesis that had actually been produced, ChatGPT’s version demonstrated good writing and some accurate high-level messages. However, a significant concern arose when the AI-generated content included convincingly fabricated evidence that wasn’t in the original base documents. Hence, while ChatGPT might be suitable for synthesizing information from single documents, the team advises against using it to merge evidence from multiple sources, especially in extended iterative conversations. This recommendation is particularly pertinent if the user isn’t deeply familiar with the original evidence base. Moreover, when challenged on its inaccuracies, the model took a defensive stance, raising further concerns about its applicability in such contexts.
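One narrow, mechanical check for fabricated evidence is to confirm that any passage a synthesis presents as a direct quote actually appears in the source documents. The sketch below is an illustration under that assumption, not something the team describes doing. The function names are hypothetical, the example strings are invented, and the check only catches verbatim quotes; it cannot detect paraphrased or invented claims, so it does not replace review by someone who knows the evidence base.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_quotes(quotes: list[str], sources: list[str]) -> list[str]:
    """Return the quotes that do not appear verbatim in any source document."""
    normalized_sources = [normalize(s) for s in sources]
    return [
        q for q in quotes
        if not any(normalize(q) in s for s in normalized_sources)
    ]


# Invented placeholder text, not taken from any real report.
sources = [
    "The project   reached 40 villages.",
    "Training was delayed by one year.",
]
quotes = ["reached 40 villages", "doubled household income"]
print(unsupported_quotes(quotes, sources))  # ['doubled household income']
```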

Tips:

- The team practiced ground truthing with some of the models to see if they were aligned. They tested and compared with other models and incorporated humans in the process at all stages, never relying completely on outputs produced by the model.

- The team found that these models perform well in tasks that have a clear, verifiable output, especially in areas like coding and code explanations. However, for more intricate tasks, the AI can sometimes produce unintended or “hallucinated” results, which makes it too great a risk for certain jobs.

For more details on the team’s experiments, see the IEG’s blog series Experimenting with GPT and Generative AI for Evaluation:

- Setting up Experiments to Test GPT for Evaluation

- Fulfilled Promises: Using GPT for Analytical Tasks

- Unfulfilled Promises: Using GPT for Synthetic Tasks

Register now for the NLP-CoP’s upcoming open meetings:

Thursday, October 26, 2023 – Join the P&A Working Group for a session led by Cheri-Leigh Erasmus, Co-CEO and Chief Learning and Agility Officer at Accountability Lab, who will share reflections from their HackCorruption program.

Tuesday, November 14, 2023 – Join the Custom Content Working Group for an exciting tutorial where they will dive into the world of Natural Language Processing (NLP) and create a simple yet powerful Question-Answering app using Streamlit, GitHub Codespaces (TBD), and the Hugging Face Transformers package.

Join the NLP-CoP!

The NLP-CoP welcomes all Monitoring, Evaluation, Research and Learning (MERL) practitioners and data practitioners interested in using text analytics approaches for MERL in the development, humanitarian, peacebuilding, and related social sectors. All MERL practitioners are welcome; no prior knowledge of text analytics is needed. Specialist data scientists and NLP specialists are also welcome. Working Group meetings will cover both technical and non-technical aspects, such as ethical and inclusive use.

To join please fill in this form indicating your agreement and alignment with the NLP-CoP Charter and Code of Conduct.
